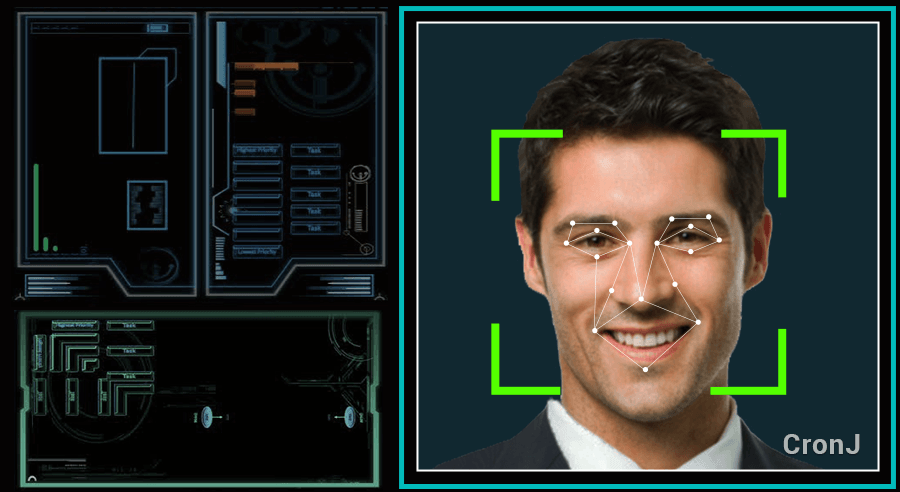

Face Recognition

A facial recognition system is a technology capable of identifying or verifying a person from a digital image or a video frame. Such systems work in several ways, but in general they compare selected facial features from a given image with the faces stored in a database.
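
To make the idea of comparing facial features against a database concrete, here is a minimal sketch in plain Python. The three-number feature vectors, the names in the database, the `identify` helper and the threshold are all hypothetical values chosen for illustration; real systems extract much longer feature vectors from the image.

```python
import math

def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def identify(probe, database, threshold):
    """Return (name, distance) of the closest enrolled face.

    If even the closest face is farther away than `threshold`,
    the name is None, meaning "unknown person".
    """
    best_name, best_dist = None, float("inf")
    for name, features in database.items():
        dist = euclidean(probe, features)
        if dist < best_dist:
            best_name, best_dist = name, dist
    if best_dist > threshold:
        return None, best_dist
    return best_name, best_dist

# Hypothetical enrolled faces (assumed values, not real measurements)
database = {"alice": [0.1, 0.8, 0.3], "bob": [0.9, 0.2, 0.5]}

match = identify([0.12, 0.79, 0.33], database, threshold=0.2)
unknown = identify([0.5, 0.5, 0.9], database, threshold=0.2)
```

Here `match` names the closest enrolled person, while `unknown` carries the name None because no enrolled face is within the threshold. The threshold is what turns identification into verification.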

We will follow these steps:

- Create a dataset of one person's face, say 100 samples.

- Use a suitable machine learning algorithm to train a model.

- Use the trained model to recognize the face.

Let's look at the code:

Create Training Data

    # Import the modules
    import os
    import cv2

    # Load HAAR face classifier
    face_classifier = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')

    # Function to extract face from frame
    def face_extractor(img):
        # Detects faces and returns the cropped face.
        # If no face is detected, it returns None.
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        faces = face_classifier.detectMultiScale(gray, 1.3, 5)

        if len(faces) == 0:
            return None

        # Crop the detected face (the last one if several are found)
        for (x, y, w, h) in faces:
            cropped_face = img[y:y+h, x:x+w]

        return cropped_face

    # Make sure the output directory exists
    os.makedirs('face', exist_ok=True)

    # Initialize Webcam
    cap = cv2.VideoCapture(0)
    count = 0

    # Collect 100 samples of your face from webcam input
    while True:
        ret, frame = cap.read()
        if not ret:
            print("Could not read a frame from the webcam")
            break

        # Run the detector once per frame
        face = face_extractor(frame)

        # If face is found in frame
        if face is not None:
            count += 1
            face = cv2.resize(face, (200, 200))
            face = cv2.cvtColor(face, cv2.COLOR_BGR2GRAY)

            # Save file in specified directory with unique name
            file_name_path = 'face/' + str(count) + '.jpg'
            cv2.imwrite(file_name_path, face)

            # Put count on images and display live count
            # (the image is grayscale, so the text color is a single intensity)
            cv2.putText(face, str(count), (50, 50), cv2.FONT_HERSHEY_COMPLEX, 1, 255, 2)
            cv2.imshow('Face Cropper', face)
        else:
            print("Face not found")

        if cv2.waitKey(1) == 13 or count == 100:  # 13 is the Enter Key
            break

    # After collecting samples, release the webcam and close all windows
    cap.release()
    cv2.destroyAllWindows()
    print("Collecting Samples Complete")
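
The call detectMultiScale(gray, 1.3, 5) passes 1.3 as the scale factor and 5 as the minimum number of neighbouring detections. The scale factor controls how much the search size changes between passes. The sketch below only lists the scales such a search would step through. It is an illustration, not OpenCV's actual implementation, and the 24x24 base window, the 640x480 frame size and the `pyramid_scales` helper are assumptions.

```python
def pyramid_scales(frame_w, frame_h, window=24, scale_factor=1.3):
    """List the scales at which a square detection window still fits the frame.

    `window` is the assumed base size of the detector in pixels;
    each step multiplies the scale by `scale_factor`.
    """
    scales = []
    scale = 1.0
    while window * scale <= min(frame_w, frame_h):
        scales.append(round(scale, 2))
        scale *= scale_factor
    return scales

# Assumed webcam resolution of 640x480
scales = pyramid_scales(640, 480)
finer = pyramid_scales(640, 480, scale_factor=1.1)
```

A smaller scale factor, as in `finer`, gives more levels. That generally means a slower but more thorough search, which is the trade-off behind the value 1.3.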

Train Model

    # Import the modules
    import cv2
    import numpy as np
    from os import listdir
    from os.path import isfile, join

    # Get the training data we previously made
    data_path = 'face/'
    onlyfiles = [f for f in listdir(data_path) if isfile(join(data_path, f))]

    # Create arrays for training data and labels
    Training_Data, Labels = [], []

    # Open training images in our datapath
    # and collect them as numpy arrays
    for i, file_name in enumerate(onlyfiles):
        image_path = join(data_path, file_name)
        image = cv2.imread(image_path, cv2.IMREAD_GRAYSCALE)
        if image is None:
            continue  # skip files OpenCV cannot read as images
        Training_Data.append(np.asarray(image, dtype=np.uint8))
        Labels.append(i)

    # Create a numpy array for the labels
    Labels = np.asarray(Labels, dtype=np.int32)

    # Initialize facial recognizer (cv2.face requires the opencv-contrib-python package)
    model = cv2.face.LBPHFaceRecognizer_create()
    # NOTE: For OpenCV 3.0 use cv2.face.createLBPHFaceRecognizer()

    # Let's train our model
    model.train(np.asarray(Training_Data), Labels)
    print("Model trained successfully")
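
The LBPH recognizer created above is based on local binary patterns. As a rough illustration of that idea, the sketch below computes the basic 3x3 pattern code for a pixel and a histogram of those codes in plain Python. It is not OpenCV's implementation. The neighbour ordering and the helper names `lbp_code` and `lbp_histogram` are assumptions, and the step of splitting the face into a grid of cells is left out.

```python
def lbp_code(patch):
    """Return the 8-bit local binary pattern of a 3x3 patch (list of 3 rows).

    Each neighbour contributes a 1 bit if it is at least as bright
    as the centre pixel, otherwise a 0 bit.
    """
    center = patch[1][1]
    # Neighbours visited clockwise from the top-left corner (assumed ordering)
    neighbours = [patch[0][0], patch[0][1], patch[0][2], patch[1][2],
                  patch[2][2], patch[2][1], patch[2][0], patch[1][0]]
    code = 0
    for value in neighbours:
        code = (code << 1) | (1 if value >= center else 0)
    return code

def lbp_histogram(image):
    """Count LBP codes over all interior pixels of a 2-D list of gray values."""
    hist = [0] * 256
    for y in range(1, len(image) - 1):
        for x in range(1, len(image[0]) - 1):
            patch = [row[x - 1:x + 2] for row in image[y - 1:y + 2]]
            hist[lbp_code(patch)] += 1
    return hist

# Corners brighter than the centre, edge neighbours darker
patch = [[9, 1, 9],
         [1, 5, 1],
         [9, 1, 9]]
code = lbp_code(patch)       # bit pattern 10101010
hist = lbp_histogram(patch)  # a 3x3 image has a single interior pixel
```

Because each code depends only on whether neighbours are brighter or darker than the centre, the resulting histograms change little under uniform lighting changes. Recognition then comes down to comparing histograms.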

Run Our Facial Recognition

    # Import the modules
    import cv2

    # Load HAAR face classifier
    face_classifier = cv2.CascadeClassifier('haarcascade_frontalface_default.xml')

    # Function to detect face
    def face_detector(img, size=0.5):
        # Convert image to grayscale
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        faces = face_classifier.detectMultiScale(gray, 1.3, 5)

        # If face not found return blank region
        if len(faces) == 0:
            return img, []

        # Obtain region of face
        for (x, y, w, h) in faces:
            # Crop before drawing so the rectangle is not part of the face region
            roi = cv2.resize(img[y:y+h, x:x+w], (200, 200))
            cv2.rectangle(img, (x, y), (x+w, y+h), (0, 255, 255), 2)

        return img, roi

    # Open Webcam
    cap = cv2.VideoCapture(0)

    while True:
        ret, frame = cap.read()
        if not ret:
            break

        image, face = face_detector(frame)

        if len(face) == 0:
            # No face was detected in this frame
            cv2.putText(image, "No Face Found", (220, 120), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 0, 255), 2)
            cv2.putText(image, "Locked", (250, 450), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 0, 255), 2)
        else:
            face = cv2.cvtColor(face, cv2.COLOR_BGR2GRAY)

            # Pass face to prediction model
            # "results" is a tuple containing the label and the confidence value
            # (for LBPH this is a distance, so lower means a closer match)
            results = model.predict(face)

            # Convert the distance to a rough percentage
            confidence = 0
            if results[1] < 500:
                confidence = int(100 * (1 - results[1] / 400))
                display_string = str(confidence) + '% Confident it is User'
                cv2.putText(image, display_string, (100, 120), cv2.FONT_HERSHEY_COMPLEX, 1, (255, 120, 150), 2)

            # If confidence is greater than 90 then the face will be recognized.
            if confidence > 90:
                cv2.putText(image, "Unlocked", (250, 450), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 255, 0), 2)
            # Otherwise the face will not be recognized.
            else:
                cv2.putText(image, "Locked", (250, 450), cv2.FONT_HERSHEY_COMPLEX, 1, (0, 0, 255), 2)

        cv2.imshow('Face Recognition', image)

        # Breaks loop when enter is pressed
        if cv2.waitKey(1) == 13:  # 13 is the Enter Key
            break

    # Release the webcam and close all windows
    cap.release()
    cv2.destroyAllWindows()
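
The recognition loop turns the value returned by model.predict into a percentage with the formula 100 * (1 - value / 400). Here is that arithmetic as a small standalone sketch. The function names and default arguments are hypothetical and only mirror the constants used in the code above.

```python
def confidence_from_distance(distance, scale=400):
    """Map a recognizer distance to the percentage used in the loop above.

    A distance of 0 gives 100; larger distances give lower values,
    and distances above `scale` give negative values.
    """
    return int(100 * (1 - distance / scale))

def is_unlocked(distance, threshold=90):
    """True when the mapped confidence is strictly above the threshold."""
    return confidence_from_distance(distance) > threshold

perfect = confidence_from_distance(0)      # identical to a training sample
halfway = confidence_from_distance(200)
too_far = confidence_from_distance(500)    # negative percentage
```

Since int() truncates, a confidence above 90 needs a mapped value of at least 91, which corresponds to a distance of at most about 36. Loosening or tightening the lock therefore means changing either the 400 or the 90.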

Note: The Train Model and Run Our Facial Recognition code should be placed in the same file, because the recognition loop uses the model object created during training.

Our output image will look like this: